Hi! I'm Zhen, currently a Gordon and Betty Moore Foundation Postdoctoral Fellow hosted at UC San Diego. My postdoc mentors are Profs. Eric Xing (CMU & MBZUAI) and Zhiting Hu (HDSI & CSE, UCSD).

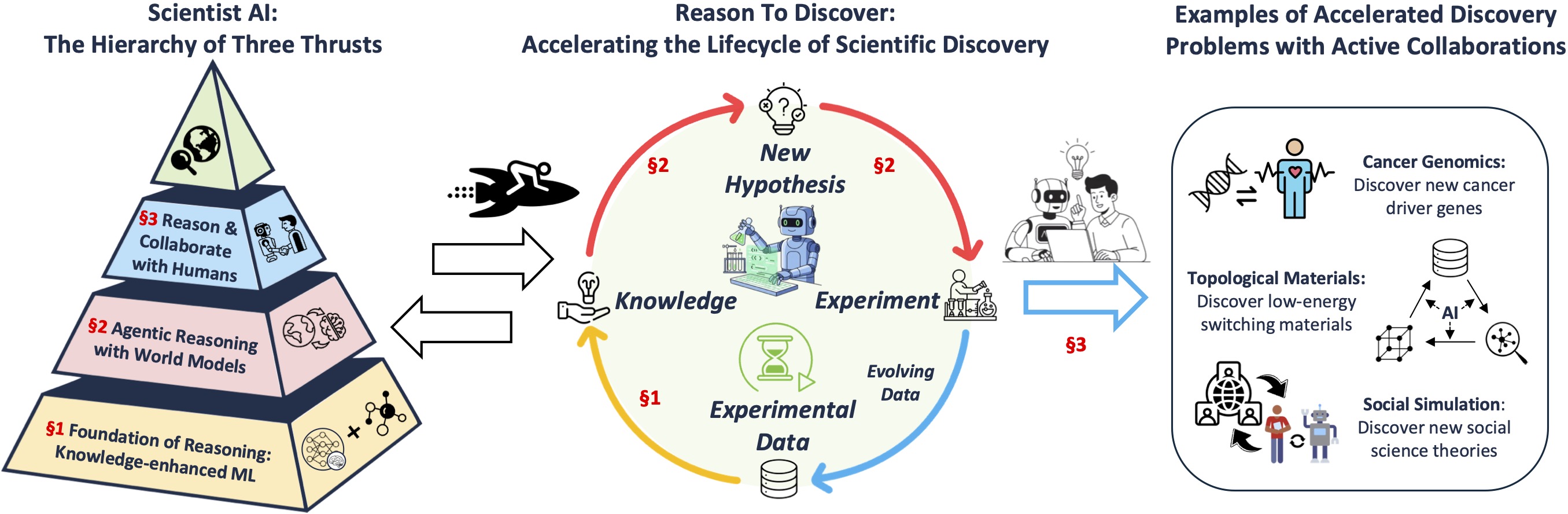

I am interested in building the foundations of Scientist AI: AI systems that learn and reason to make discoveries with scientist-level efficiency, extrapolation, and reliability, and that collaborate with humans across interdisciplinary scientific domains.

To this end, my research seeks to answer fundamental questions such as: How do models represent knowledge for discovery? How do they reason and plan to extrapolate? How do they reliably collaborate and co-discover with humans? My philosophy centers on bringing new perspectives that shift existing paradigms or unify seemingly different ones, as exemplified by LLM Reasoners (reasoning as planning), PromptAgent (prompting as search), ToolkenGPT (tools as tokens), and the LAW 2025 workshop at NeurIPS 2025 (Bridging Language, Agent, and World Models).

I earned my PhD in Computer Science at The Ohio State University, advised by Prof. Huan Sun, where I developed foundational frameworks for knowledge-centric AI systems. My work has been supported by and recognized with the Gordon and Betty Moore Foundation Fellowship, an OpenAI Research Grant, Rising Star in Data Science (UChicago), the Best Paper Award at SoCal NLP, and the Amazon Alexa Prize, among others. I collaborate with the MIT-IBM Lab, Microsoft Research, NEC Labs, and academic institutions including CMU, MBZUAI, and multiple UC campuses and national labs through the UC-LEAP project.

Research Overview

Scientist AI sits at the emerging intersection of advanced AI reasoning and accelerated discovery across science and society. This space extends traditional data-driven discovery and calls for more scientist-like AI systems that actively explore hypotheses, simulate possibilities, and generate new, verifiable knowledge across disciplines.

Selected Honors

- Gordon and Betty Moore Foundation Fellowship, 2025

- OpenAI Research Grant Award (one of 11 teams selected worldwide), 2024

- Rising Star in Data Science, University of Chicago, 2022

- Best Paper Award, SoCal NLP Symposium, 2023

- Amazon Alexa Prize Winner, TaskBot Challenge (3rd Place), 2022

- Graduate Research Award (Mike Liu Scholarship), OSU, 2022


Research Highlights

Algorithmic Innovation

- Reasoning and planning: RAP (EMNLP 2023), LLM Reasoners (COLM 2024), ThinkSum (ACL 2023)

- Discrete optimization: PromptAgent (ICLR 2024), DRPO (EMNLP 2024)

- Efficient ML: Multitask Prompt Tuning (ICLR 2023), Self-MoE (ICLR 2025)

- Tool learning: ToolkenGPT (NeurIPS 2023 Oral)

- Knowledge extraction with weak supervision: SurfCon (KDD 2019), X-MedRELA (ACL 2020)

Impact in Science

- AI for biomedical science: Nature 2025, MutationProjector (the first cancer genomics foundation model for tumor mutation profiles), scPilot (NeurIPS 2025), BioNEV (Bioinformatics 2019)

- AI for materials science: UC-LEAP (Discovering Low-Energy, AI-Informed Phase Transitions Materials)

- AI for social science: computational social simulation (DeepPersona, NeurIPS 2025), TacoBot (Alexa Prize 2021)

Open-Source Infrastructure

- Reasoning libraries: LLM Reasoners (2.3k+ GitHub stars), PromptAgent (300+ GitHub stars)

- Model evaluation: Decentralized Arena, FIRE-Bench (Reliably Benchmarking Scientific (Re-)Discovery)

- Pre-training data: TxT360 (a globally deduplicated corpus across 99 CommonCrawl snapshots; 58K downloads last month)

Visit my Publications page for the complete list of papers and research contributions.